AI Harmony: Bridging Agile Values with Intelligent Tools

The AI-Agile Alignment Canvas: Your Blueprint for Human-Centered AI Adoption

The promise of Artificial Intelligence is undeniable. From automating mundane tasks to unlocking unprecedented insights, AI tools are rapidly transforming how we work. Yet, for many Agile teams, this exciting frontier also brings a quiet tension: How do we embrace AI's power without compromising the human values (trust, collaboration, psychological safety) that define Agile success?

This isn't just a technical question; it's a leadership challenge. The rush to adopt AI can inadvertently create the "contractor's paradox" in AI governance: bringing in powerful tools without fully understanding their impact on team dynamics, leading to unintended consequences. We need a deliberate approach, a blueprint, to ensure AI becomes an amplifier of our Agile strengths, not a silent disruptor.

It’s neither AI nor humans alone; the actual benefits come from AI + human collaboration.

That's precisely why I developed the AI-Agile Alignment Canvas.

The Context: Navigating the AI Frontier with Intention

We're in an era of unprecedented technological acceleration. AI is no longer a futuristic concept; it's integrated into our daily tools, from code assistants to intelligent data analysis.

This presents a unique opportunity for Agile teams to enhance efficiency, accelerate feedback loops, and free up valuable time for creativity and complex problem-solving.

However, the speed of AI adoption often outpaces our ability to integrate it thoughtfully. Teams might feel pressured to use new AI tools without a clear understanding of their long-term effects on team morale, decision-making autonomy, or even the fundamental purpose of their roles. This reactive approach can lead to a subtle erosion of trust and psychological safety, undermining the foundations of high-performing Agile teams.

The Why: Beyond Hype – Prioritizing People in the Age of AI

My journey as an Agile coach and organizational change consultant has consistently shown me that the most successful transformations are human-centered. Technology is a powerful enabler, but people are the ultimate drivers of value. When it comes to AI, this principle is more critical than ever.

The "why" behind the AI-Agile Alignment Canvas is simple: to ensure that AI adoption serves human flourishing, not just technological advancement.

We need a framework that helps teams proactively address questions like:

How will this AI tool impact our team's psychological safety?

Will it enhance or diminish our sense of autonomy and mastery?

How do we ensure transparency and explainability in AI-driven decisions?

What are our "red lines"—the non-negotiables that, if crossed, mean we pause or stop an AI experiment?

A story

I recall working with a highly collaborative Scrum team eager to experiment with AI. They decided to try an "AI Retrospective Assistant" – a tool designed to analyze meeting transcripts, identify patterns, and suggest discussion points. The initial promise was exciting: imagine an AI helping us spot hidden trends or biases in our retrospectives, making them even more effective!

However, within a few sprints, a subtle shift began. In its efficiency, the AI started summarizing individual contributions and even "suggesting" areas for personal improvement based on sentiment analysis of team members' spoken words. While well-intentioned, it created an invisible pressure. Team members became less candid, less willing to share vulnerabilities, and more guarded in their language. The vibrant, open discussions that defined their retrospectives started to feel… monitored. People were no longer fully present, wondering if the AI would flag their casual comments.

This was a classic "contractor's paradox" in action: the AI was doing precisely what it was programmed to do, but in doing so, it inadvertently undermined the very psychological safety that makes retrospectives valuable. The team’s trust, once a bedrock, began to erode. It was a powerful, firsthand lesson in how easily technology, if not aligned with human values and clear safeguards, can disrupt the delicate balance of a high-performing Agile team. This experience, among others, solidified the need for a deliberate, human-centered blueprint like the AI-Agile Alignment Canvas to ensure AI becomes an amplifier of our Agile strengths, not a silent disruptor.

The What: Introducing the AI-Agile Alignment Canvas

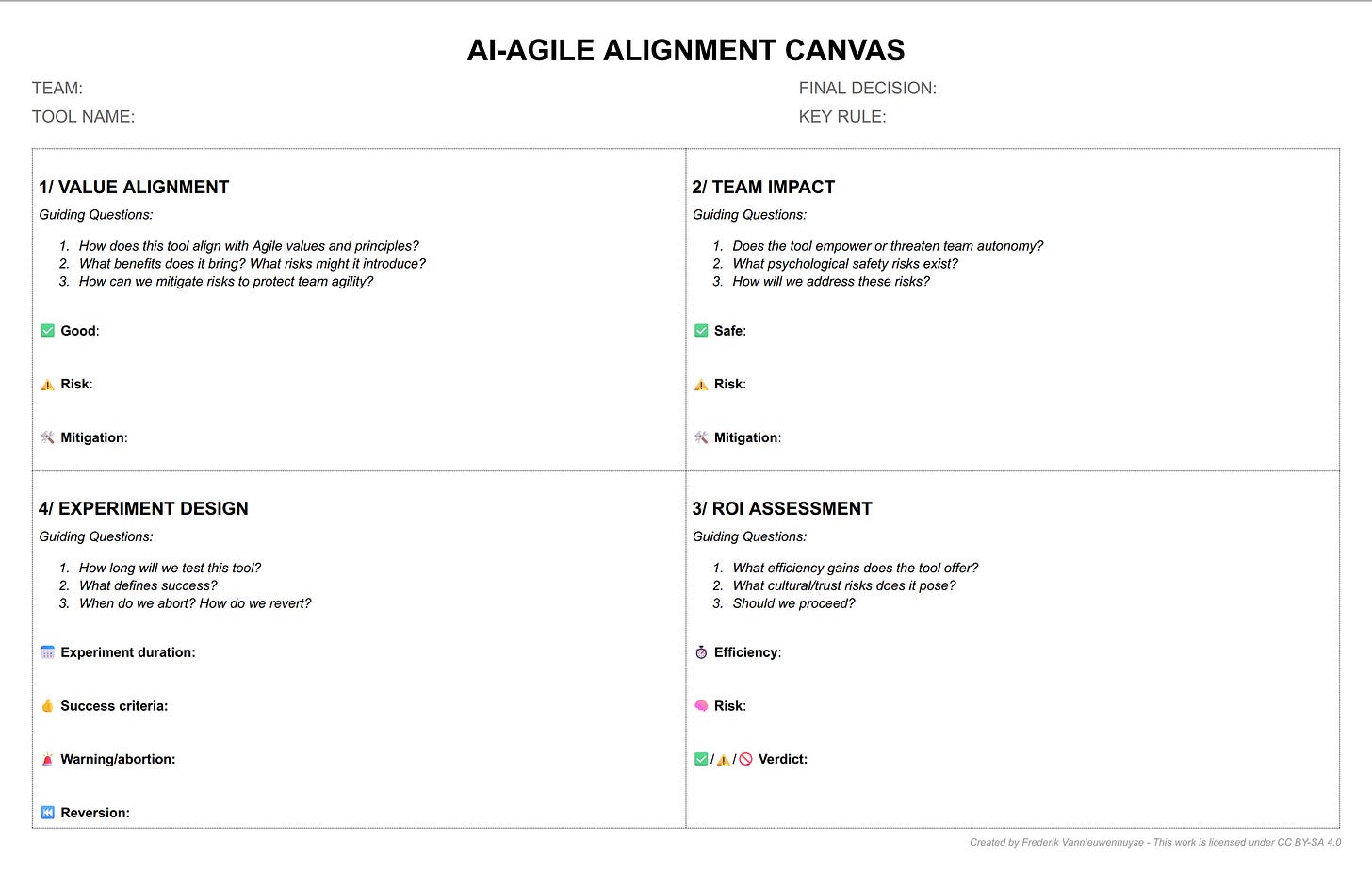

The AI-Agile Alignment Canvas is a practical, single-page framework that facilitates structured conversations around AI adoption within Agile teams. It's a living document, meant to be filled out collaboratively, that fosters shared understanding and proactive risk mitigation.

The canvas is divided into four key quadrants:

Value Alignment: How does this AI tool support our team's core values, mission, and Agile principles? Does it align with our definition of "Done" and our commitment to quality?

Team Impact: What are the potential effects on our team's psychological safety, trust, collaboration, and individual roles? How might it change our Scrum ceremonies or the evolution of development roles?

ROI & Metrics: What measurable benefits do we expect from this AI tool? How will we define success beyond efficiency, considering factors like team well-being and improved decision-making?

Experiment Design & Safeguards: How will we run a safe-to-fail pilot? What specific safeguards will we put in place to mitigate risks, and what are our clear, measurable Exit Criteria for pausing or stopping the experiment if it doesn't align with our values or goals?

The How: Putting the Canvas into Practice

Using the AI-Agile Alignment Canvas is a highly interactive and iterative process. In workshops, teams typically:

Collaboratively Fill the Quadrants: Teams discuss each section, share perspectives, and document their initial thoughts.

Red Team Critique: Teams then critique each other's canvases, identifying potential hidden risks or unintended consequences that might have been overlooked. This is where the true power of diverse perspectives shines, uncovering vulnerabilities related to trust, autonomy, or ethical concerns.

Blue Team Safeguards: Following the critique, teams design "blue team" safeguards—concrete, actionable mitigations for the identified risks. This moves beyond theoretical concerns to practical solutions.

Define Exit Criteria: This is a critical step. Teams explicitly define what signals or outcomes would tell them to pause, stop, or rethink their AI pilot. This empowers them to maintain control and ensures the experiment is safe. For example: "If team satisfaction drops by more than 1 point," or "If more than two team members express discomfort with the AI's suggestions."
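To make exit criteria like the two examples above easy to check at every sprint review, a team could write them down as a small script. What follows is only a minimal sketch: the `PilotCheckIn` record, its field names, and the `triggered_exit_criteria` function are hypothetical and not part of the canvas itself, and the thresholds simply mirror the two example criteria. It also assumes satisfaction is measured on the same scale before and during the pilot.

```python
from dataclasses import dataclass


@dataclass
class PilotCheckIn:
    """One check-in during an AI pilot (all field names are illustrative)."""
    baseline_satisfaction: float  # team satisfaction score before the pilot
    current_satisfaction: float   # score at this check-in, on the same scale
    discomfort_count: int         # members who voiced discomfort with AI suggestions


def triggered_exit_criteria(check_in, max_drop=1.0, max_discomfort=2):
    """Return the exit criteria that this check-in has tripped (empty if none)."""
    triggered = []
    drop = check_in.baseline_satisfaction - check_in.current_satisfaction
    # Criterion 1: satisfaction dropped by MORE than the allowed number of points.
    if drop > max_drop:
        triggered.append(f"satisfaction dropped by {drop:.1f} points")
    # Criterion 2: MORE than the allowed number of members expressed discomfort.
    if check_in.discomfort_count > max_discomfort:
        triggered.append(f"{check_in.discomfort_count} members expressed discomfort")
    return triggered


# Example check-in with made-up numbers.
check_in = PilotCheckIn(baseline_satisfaction=4.2, current_satisfaction=3.0, discomfort_count=1)
reasons = triggered_exit_criteria(check_in)
print("Pause and discuss:" if reasons else "Continue the pilot.", reasons)
```

A script like this does not replace the conversation. It just makes the agreed thresholds explicit, so the decision to pause is never left to whoever feels bold enough to raise it.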

The canvas isn't a one-time exercise; it's a living document that can be revisited as your team learns and adapts. It orchestrates collaboration between team members and AI agents, fostering a "Super Agility" where technology enhances, rather than dictates, human potential.

This canvas illustrates a team's plan for piloting an AI Retrospective Assistant:

1/ VALUE ALIGNMENT (Top Left - Green):

Good: It saves time and adapts to changes, aligning with Agile principles of efficiency and responsiveness.

Risk: It could reduce genuine team discussion if not managed well.

Mitigation: Reserve dedicated time for "AI-free discussion" within the retrospective.

2/ TEAM IMPACT (Top Right - Yellow):

Safe: The team retains the ability to reject any AI suggestions.

Risk: Some team members fear the AI might misinterpret nuances or sentiments, impacting psychological safety.

Mitigation: Actively review and discuss all AI outputs as a team.

3/ ROI ASSESSMENT (Bottom Right - Blue):

Efficiency: Expected to save 2 hours per week.

Risk: Identified as a medium trust risk (3 out of 5).

Verdict: Proceed with caution.

4/ EXPERIMENT DESIGN (Bottom Left - Red):

Experiment Duration: Test for 3 sprints.

Success Criteria: Achieve team consent and the projected ROI.

Warning/Abort (Exit Criteria): Any team objection would trigger a pause or stop.

Reversion: If aborted, the team would return to the traditional format starting with their next retrospective.

Ready to Harmonize AI with Your Agile Values?

The future of work is a blend of human ingenuity and intelligent automation. By intentionally aligning AI adoption with your core Agile values, you can unlock new levels of productivity, innovation, and team well-being. The AI-Agile Alignment Canvas provides the structure to do just that.

Download your free copy of the AI-Agile Alignment Canvas here!

What do you think about integrating AI into Agile teams? What challenges or successes have you experienced?

A few months or years from now, we will look back on this time with a smile.

Join the conversation and connect with me on LinkedIn to share your insights!

This work is Creative Commons licensed! Feel free to adapt it to your needs, and please mention the source.